Table of Contents

I. Introduction

Purpose & Scope

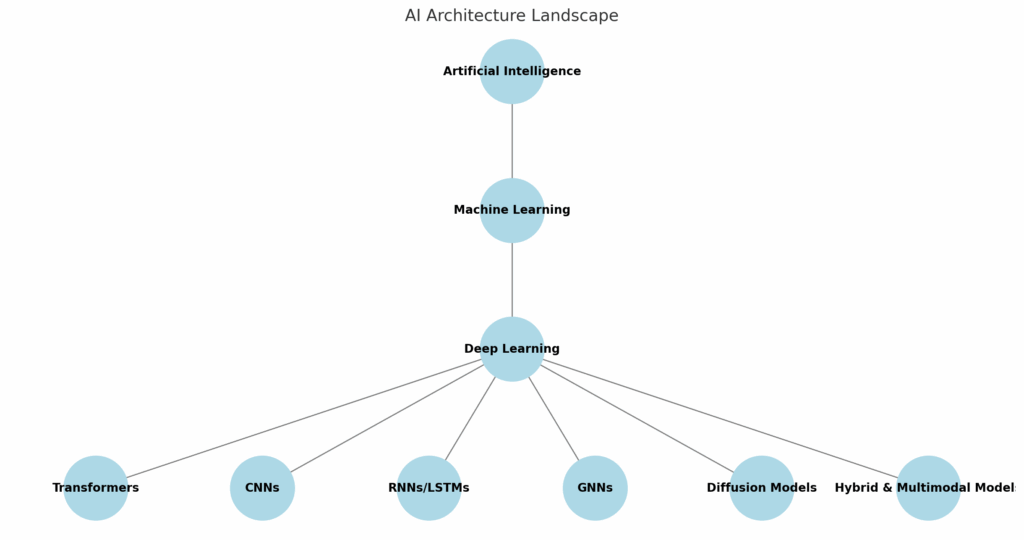

Artificial Intelligence (AI) has rapidly evolved from research lab curiosity to real-world necessity. Whether it’s powering search engines, self-driving cars, chatbots, or medical breakthroughs, AI models now touch nearly every industry. This article provides a clear, structured overview of the architectural foundations that make these intelligent systems work. Rather than dive into code or algorithms, we focus on the big-picture design of AI systems—what kinds of models exist, how they function, and where they are already in use.

The Fragmented but Evolving Landscape

AI isn’t one single technology—it’s a constellation of specialized systems, each designed to handle a particular type of task. From vision-based neural networks to conversational transformers to decision-making agents, different architectures thrive in different domains. But as models grow more capable, we’re witnessing an emerging trend toward convergence, where a handful of powerful model types are becoming operational standards across disciplines.

Generative AI: The Breakout Subset

Among the many architectural branches of AI, generative AI has gained the most attention in recent years. These are the models that generate text, images, code, music—even video. Powered by transformers and diffusion-based architectures, generative AI is now fueling a new wave of creativity and automation. While this article introduces the major technologies behind generative AI, a follow-up article will offer a dedicated exploration of Generative AI Architecture and its impact on industries and innovation.

What to Expect in This Guide

This article explores seven foundational AI model families, each with its own architecture and real-world impact:

- Transformer-Based Models – The powerhouses behind large language models like GPT, BERT, and DeepSeek

- Diffusion Models – Used for creative content generation in tools like DALL·E and Midjourney

- Convolutional Neural Networks (CNNs) – Key players in image recognition and real-time visual systems

- Recurrent Neural Networks (RNNs) and LSTMs – Historic leaders in speech and time-series data processing

- Reinforcement Learning Models – Engines of decision-making in games, robotics, and autonomous systems

- Graph Neural Networks (GNNs) – Essential for relational reasoning in social networks and drug discovery

- Hybrid & Multimodal Models – Cutting-edge systems that combine vision, language, and code processing in a unified architecture

We’ll not only explain how each model works but also highlight operational systems—like Tesla’s Autopilot, OpenAI’s ChatGPT, DeepMind’s AlphaZero, and Google’s PaLM-E—that put these architectures into practice. In the final sections, we’ll look ahead to future trends, such as sparse expert models (like DeepSeek-MoE), quantum AI, and explainable architectures.

II. Overview of Current AI Architectures and Operational Models

A. Transformer-Based Models

Concept

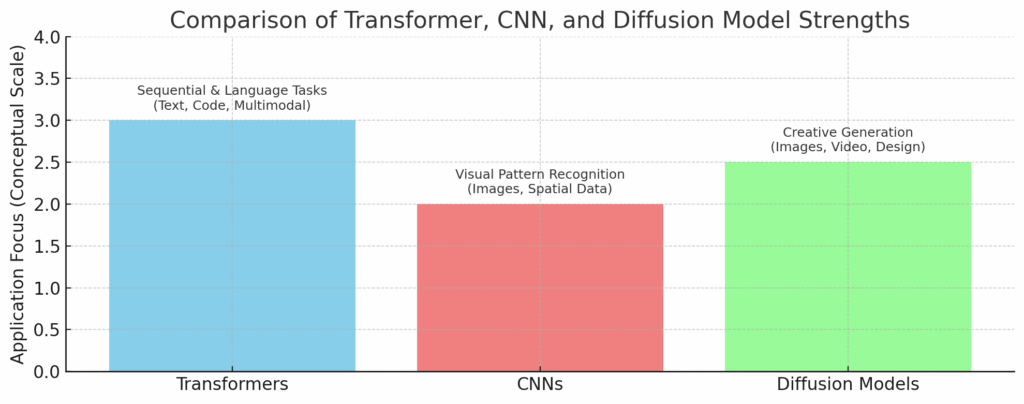

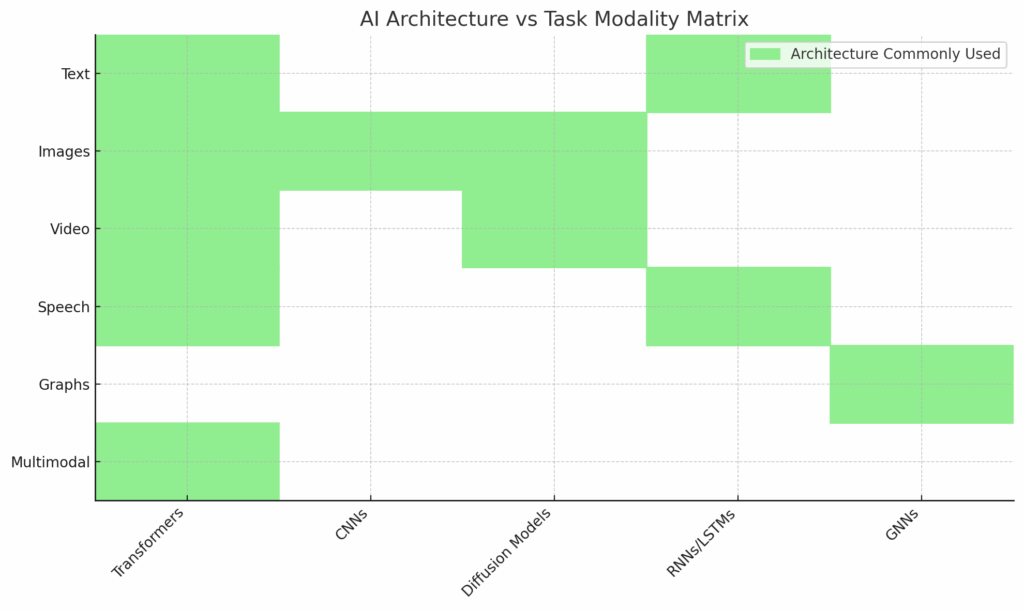

Transformers have revolutionized the field of AI by enabling models to understand context over long sequences of data. Introduced in 2017 through the groundbreaking paper “Attention Is All You Need,” transformer architectures use a self-attention mechanism to evaluate relationships between all elements in an input—whether words, pixels, or tokens. This approach has become the backbone of modern natural language processing (NLP), code generation, and increasingly, image and multimodal applications.

Transformers are highly scalable, support parallel computation, and are easily fine-tuned for specific tasks. As a result, they now serve as the architectural foundation for many of the world’s most powerful AI systems.

Key Architectures and Operational Systems

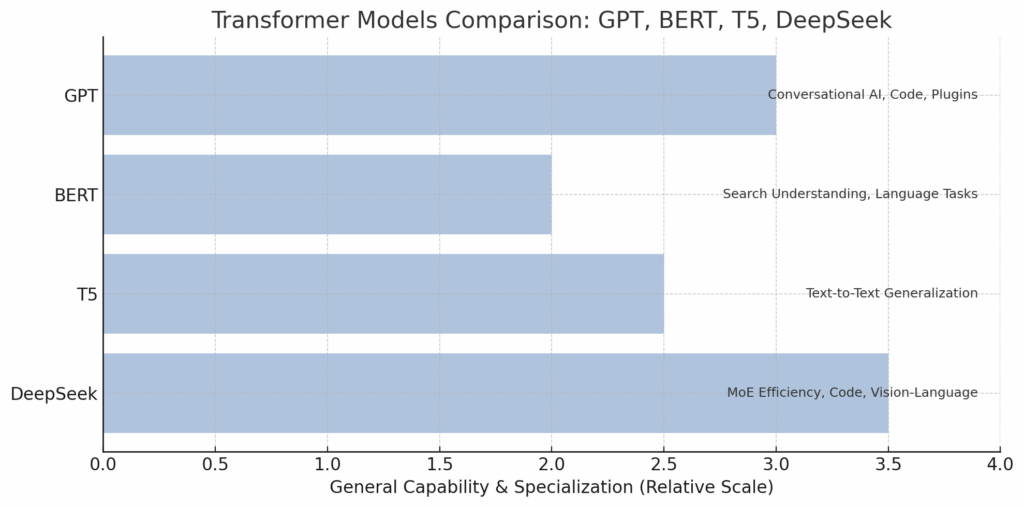

1. GPT (Generative Pre-trained Transformer) – OpenAI

- ChatGPT: Built on GPT-3.5 and GPT-4, this conversational agent powers real-time dialogue, coding assistance, writing tools, and plugins across industries.

- GPT-3 / GPT-4 APIs: Used in education, healthcare, customer support, and product development platforms to automate language-intensive tasks.

2. BERT (Bidirectional Encoder Representations from Transformers) – Google

- Google Search: BERT helps Google understand natural language queries by capturing bidirectional context.

- Hugging Face Ecosystem: Numerous language models (DistilBERT, RoBERTa, etc.) are derived from BERT for classification, translation, and Q&A systems.

3. T5 (Text-to-Text Transfer Transformer) – Google Research

- Reformulates every NLP task as a text-to-text problem, making the architecture extremely flexible.

- TensorFlow Implementations: Widely used in academic research and enterprise NLP experimentation.

4. DeepSeek – Open-Source (Chinese Research Group)

- DeepSeek-MoE: A sparse Mixture of Experts (MoE) transformer that activates only portions of the model for each query, drastically improving computational efficiency at scale.

- DeepSeek-Coder: Specializes in generating programming code, rivaling Codex and GitHub Copilot.

- DeepSeek-VL: A vision-language model capable of image captioning, visual reasoning, and instruction following.

- DeepSeek distinguishes itself by making sophisticated models openly available and showing how sparse architectures can outperform traditional dense transformers with lower resource requirements.

Why It Matters

Transformer models now dominate AI development across sectors. Their modular, scalable nature makes them adaptable for tasks far beyond language—extending into images, audio, code, and decision-making. With innovations like DeepSeek’s MoE and GPT’s plugin architecture, transformers are evolving toward general-purpose intelligence engines.

B. Diffusion Models

Concept

Diffusion models are a class of generative AI systems that create high-quality data—typically images or video—by starting with random noise and gradually refining it through a learned denoising process. Inspired by physical diffusion processes (like the spread of particles), these models learn to reverse the noise step-by-step, reconstructing coherent output from randomness.

Unlike transformers, which model sequences directly, diffusion models excel at generating continuous and high-resolution data, making them especially effective in creative and design-focused applications.

How They Work

Training involves two stages:

- Forward Process: Gradually add noise to training data (e.g., images) over many steps.

- Reverse Process: Train the model to denoise and recover the original data step-by-step.

This produces a system capable of generating entirely new content by sampling from pure noise and refining it iteratively.

Operational Systems

1. Stable Diffusion – Stability AI

- Open-source image generation model with customizable prompts.

- Powers countless community-driven apps, plugins, and experiments.

- Known for local use and integration into creative workflows without reliance on cloud APIs.

2. DALL·E – OpenAI

- One of the first high-profile diffusion models for image generation from text prompts.

- Combines transformer-based encoding of prompts with a diffusion-style image generator.

- Available in ChatGPT Pro and API integrations.

3. Midjourney – Independent Lab

- Specializes in artistic and stylized image synthesis with a strong visual aesthetic.

- Uses a proprietary diffusion engine, accessed via Discord.

- Widely adopted in design, advertising, and concept art.

Why It Matters

Diffusion models represent a major leap in creative AI, shifting the focus from text-only generation to multimodal expression. Their open-source nature (e.g., Stable Diffusion) has fueled a grassroots ecosystem, while proprietary systems like Midjourney demonstrate the demand for AI-generated art and branding assets. These models are also being extended into video generation, 3D modeling, and even drug design, making them one of the most versatile innovations in recent AI development.

C. Convolutional Neural Networks (CNNs)

Concept

Convolutional Neural Networks (CNNs) are specialized deep learning architectures designed to process grid-like data, such as images. Unlike fully connected networks, CNNs use convolutional layers to detect local patterns—edges, textures, shapes—by scanning small filters across the input. As these features stack, CNNs build a hierarchical understanding of visual content.

This makes CNNs ideal for tasks involving image recognition, object detection, facial analysis, and even medical diagnostics.

How They Work

CNNs rely on three main components:

- Convolutional layers to extract local features

- Pooling layers to reduce dimensionality and retain key signals

- Fully connected layers to interpret the extracted features for classification or prediction

Variants like ResNet, VGG, and Inception have become benchmarks in visual tasks.

Operational Systems

1. Google Cloud Vision API

- Offers image labeling, face and object detection, and OCR capabilities.

- Uses CNN-based models trained on large-scale datasets to interpret visual data across a range of industries.

2. Microsoft Azure Computer Vision

- Provides AI services for spatial analysis, content moderation, and image metadata extraction.

- Employs advanced CNNs to classify, caption, and describe images automatically.

3. Tesla Autopilot

- Integrates CNNs for real-time visual perception of roads, vehicles, signs, and pedestrians.

- Runs on edge hardware inside vehicles, optimized for rapid inference under strict latency constraints.

- Combines camera feeds from multiple angles into a CNN-based processing pipeline to support autonomous driving decisions.

Why It Matters

Before transformers took center stage, CNNs were the gold standard for computer vision—and they still are in many production systems. Their efficiency, interpretability, and hardware-compatibility make them well-suited for embedded AI in mobile devices, smart cameras, and autonomous systems. While vision transformers (ViTs) are gaining traction, CNNs remain the core visual backbone in applied AI.

D. RNNs & LSTMs (Recurrent Neural Networks / Long Short-Term Memory Networks)

Concept

Before transformers reshaped the AI landscape, Recurrent Neural Networks (RNNs) and their advanced variant, Long Short-Term Memory (LSTM) networks, were the dominant architecture for handling sequential data—particularly in speech recognition, natural language processing, and time-series forecasting.

Unlike CNNs and transformers, RNNs process input one step at a time, preserving context through a looping internal state. This allows them to “remember” previous inputs and apply them to later decisions.

LSTMs were introduced to solve the vanishing gradient problem, which made it difficult for basic RNNs to capture long-term dependencies in sequences. They introduced a memory cell and gating mechanisms to better regulate information flow.

How They Work

- RNNs pass hidden state information from one step to the next.

- LSTMs improve upon this with specialized gates (input, output, forget) that control which data to keep or discard.

Although powerful, RNNs and LSTMs are sequential by nature and do not lend themselves well to parallelization, which made them less efficient than transformers in large-scale training environments.

Operational Systems

1. Google’s Early Speech Recognition Systems

- Used LSTM-based architectures to transcribe spoken words with high accuracy.

- Served as a foundation for Android’s voice assistant technology.

2. Mozilla DeepSpeech

- An open-source speech-to-text engine that originally employed RNN and LSTM layers.

- Designed for transparency and offline accessibility.

3. Early Voice Assistants (Siri, Alexa, Cortana)

- The first generations of voice-activated systems used LSTM models for language parsing and speech synthesis.

- These have since transitioned to transformer-based backends in most modern implementations.

Why It Matters

RNNs and LSTMs represent an important evolutionary stage in AI—they paved the way for modern sequence modeling and inspired the architectural innovations seen in transformers. While they’re now largely replaced in large-scale NLP, they still play a role in resource-constrained environments, low-latency systems, and embedded devices. Understanding RNNs also provides crucial historical context for today’s AI capabilities.

E. Reinforcement Learning Models

Concept

Reinforcement Learning (RL) is a framework where AI agents learn by interacting with an environment, making decisions to maximize a cumulative reward. Unlike supervised learning, where correct answers are provided, RL agents must discover optimal strategies through trial and error—similar to how humans learn through experience.

RL is especially useful in dynamic, sequential decision-making tasks, where the outcome depends on a series of actions rather than a single prediction.

How It Works

- An agent receives a state from the environment.

- It selects an action based on its current policy.

- The environment returns a new state and a reward.

- Over time, the agent refines its policy to maximize rewards.

Popular RL algorithms include Q-learning, Policy Gradients, and Actor-Critic methods. In many cases, deep neural networks are used as function approximators, leading to the field of Deep Reinforcement Learning.

Operational Systems

1. AlphaGo & AlphaZero – DeepMind

- AlphaGo famously defeated world champion Go player Lee Sedol in 2016.

- AlphaZero expanded the concept, mastering chess, shogi, and Go from scratch using self-play reinforcement learning.

- These systems demonstrated that RL could discover superhuman strategies with no prior human data.

2. OpenAI Five

- A reinforcement learning system trained to play the complex, real-time strategy game Dota 2.

- Used self-play, curriculum learning, and massive parallel simulations to achieve near-professional performance.

- Highlighted RL’s potential for multi-agent environments and real-time planning.

3. Robotics and Autonomous Systems

- RL principles are widely used in simulated robotics, such as teaching robotic arms to grasp objects or balance.

- RL also contributes to autonomous driving prototypes, especially for decision-making tasks like lane changes or obstacle avoidance.

- While real-world RL training is resource-intensive and risky, simulation environments like MuJoCo and Isaac Gym are accelerating research and deployment.

Why It Matters

Reinforcement learning offers a unique approach to AI: learning through experience, not just data. While computationally demanding, it’s the go-to architecture for complex control problems, adaptive agents, and self-improving systems. As simulation technology improves and safety constraints are better handled, RL is expected to play a key role in robotics, finance, logistics, and advanced planning applications.

F. Graph Neural Networks (GNNs)

Concept

Graph Neural Networks (GNNs) are designed to process data structured as graphs—networks of interconnected nodes and edges. Unlike traditional neural networks that operate on grids (like images) or sequences (like text), GNNs excel at capturing relationships and interdependencies in non-Euclidean data.

This makes them ideal for applications where structure matters more than order—like social networks, recommendation engines, molecular analysis, and knowledge graphs.

How They Work

- Each node in a graph has features and is connected to other nodes.

- GNNs update each node’s representation by aggregating information from its neighbors, passing “messages” across the graph structure.

- Multiple layers allow the network to capture increasingly global context.

Variants include Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Message Passing Neural Networks (MPNNs), each optimized for different propagation and weighting strategies.

Operational Systems

1. Social Network Recommendations

- Facebook: Uses GNN-inspired architectures to power friend suggestions, community recommendations, and content ranking by modeling the social graph.

- LinkedIn: Employs GNNs in its “People You May Know” feature and to improve job recommendations by understanding connections between users, roles, and companies.

2. Drug Discovery and Chemistry

- AtomNet (by Atomwise): Applies GNNs to model molecules as graphs, predicting how chemical compounds interact with proteins.

- This speeds up pharmaceutical research by identifying potential drug candidates computationally—before expensive lab testing.

3. Knowledge Graph Applications

- Enterprises use GNNs to reason over structured knowledge bases, enabling AI systems to answer complex queries with contextual understanding.

- Used in question answering systems, chatbots, and semantic search.

Why It Matters

In a world overflowing with interconnected data, GNNs enable AI to reason relationally—a crucial capability not offered by CNNs or transformers out of the box. They’ve become indispensable in sectors like biotech, e-commerce, fintech, and enterprise intelligence. As AI moves toward systems that need to understand context, hierarchy, and influence, GNNs provide the architecture for structured thinking.

G. Hybrid & Multimodal Models

Concept

Hybrid and multimodal models represent the next evolutionary step in AI architecture—combining multiple types of input (text, images, video, code, audio) and multiple neural network types within a single system. These architectures are designed to reflect how humans think: not in isolated silos of vision or language, but as a blend of modalities that inform one another.

Some hybrid models fuse architectures (e.g., transformers + CNNs), while others unify inputs (e.g., vision-language models). This approach is essential for building general-purpose AI systems capable of perception, reasoning, and communication across contexts.

How They Work

- Use a shared embedding space where inputs of different types (e.g., images and text) are encoded into compatible representations.

- Apply cross-modal attention or fusion layers to integrate and relate those inputs.

- The final output is context-aware across multiple modalities, enabling tasks like image captioning, visual Q&A, and prompt-driven video generation.

Operational Systems

1. CLIP (Contrastive Language–Image Pretraining) – OpenAI

- Trained on images and associated text captions to learn visual concepts from natural language.

- Powers features like image classification via text prompts and zero-shot learning.

- Forms the foundation for tools like DALL·E and OpenAI’s visual understanding capabilities in ChatGPT.

2. PaLM-E – Google

- A robot-embodied, multimodal transformer that processes both visual input and language instructions.

- Integrates models for reasoning, image recognition, and action planning.

- Represents one of the first large-scale embodied AI systems designed for real-world tasks.

3. Imagen Video – Google

- A video-generation system that combines diffusion models with transformer encoders, generating coherent video clips from text prompts.

- Demonstrates the growing convergence between generative AI and multimodal synthesis.

Why It Matters

As AI becomes more human-like in capability, hybrid and multimodal architectures are essential for contextual understanding and creativity. These systems are unlocking new possibilities in robotics, education, entertainment, accessibility, and beyond. They’re also laying the groundwork for Artificial General Intelligence (AGI) by integrating perception, cognition, and action into unified frameworks.

For a broader visual classification of machine learning models, see our article on the MIT Periodic Table of Machine Learning.

III. Convergence and Model Agnosticism

No One-Size-Fits-All — Yet

Each AI architecture we’ve explored—transformers, CNNs, diffusion models, RNNs, GNNs—was designed for a specific type of data and task. Historically, this led to high specialization: CNNs for vision, RNNs for sequences, GNNs for networks, and so on. But as AI has matured, these boundaries are beginning to blur.

The Rise of the Transformer Everywhere

Transformer-based models, originally developed for natural language processing, are increasingly applied to non-text domains.

- Vision Transformers (ViTs) now rival CNNs in image classification.

- Models like DeepSeek-VL and PaLM-E show transformers handling multimodal inputs with remarkable fluency.

- Even diffusion models often use transformers as encoders or prompt handlers, blending generative modeling with sequential reasoning.

Transformers are proving adaptable, scalable, and modular—qualities that suit the demands of complex, real-world systems.

Efficiency is the New Frontier

- Sparse expert models like DeepSeek-MoE and Google’s Switch Transformer selectively activate small parts of the network, reducing computational overhead while maintaining performance.

- Distilled models like DistilBERT shrink large models into smaller, faster variants.

- Hybrid systems combine the right tool for each job: CNNs for early visual processing, transformers for interpretation, and GNNs for relationship modeling.

As models grow in size and ambition, efficiency is becoming a core architectural priority.

This shift favors architecture-agnostic pipelines—designs that don’t rely on one model type, but orchestrate many based on task, context, and available resources.

Real-World Systems Reflect Convergence

Today’s most advanced AI systems already reflect this convergence:

- Tesla’s Autopilot blends CNNs, transformers, and rule-based systems.

- OpenAI’s stack integrates transformers with embedding lookups, diffusion generators, and plugin tools.

- Google’s Gemini and Anthropic’s Claude are built around transformer cores but designed to integrate future modalities and architectures.

Rather than choosing one model to rule them all, AI engineering is moving toward architecture ecosystems—modular systems where different components handle different layers of intelligence.

IV. Future Trends in AI Architecture

As AI continues to advance, new architectural frontiers are emerging. These trends reflect both technical necessity—such as scaling efficiency—and conceptual ambition, such as building AI that can think, see, speak, and act within a unified framework.

A. Unified Multimodal Models

The Vision

Instead of building separate models for language, images, audio, and video, the future points to single models that handle all modalities. These models understand not only what you say, but what you show—and they can respond across channels.

Emerging Systems

- GPT-4 with Vision: Extends the capabilities of ChatGPT to understand images, diagrams, and screenshots.

- Google’s PaLM-E: Integrates robotic actions, language reasoning, and visual input in a unified architecture.

- Gemini (Google DeepMind): A family of models built to fuse modalities and memory into one intelligent agent.

Unified multimodal systems will be central to embodied AI, virtual assistants, and advanced educational or creative tools.

B. Quantum AI

A Glimpse of What’s Next

Quantum AI refers to the use of quantum computing to accelerate or expand AI capabilities. While still in its infancy, quantum methods hold promise for:

- Solving optimization problems beyond classical capabilities

- Reducing the training time of large models

- Simulating complex biological or physical systems

Early Explorations

- IBM Quantum: Offers quantum computing platforms with AI-focused research programs.

- Google Quantum AI: Working toward “quantum supremacy” for select ML applications.

- Hybrid models pairing classical neural networks with quantum subroutines are under early-stage experimentation.

Quantum AI may eventually reshape the foundations of architecture design, but its practical impact remains speculative for now.

C. Sparse & Expert Models

Smarter Scaling

As the cost of running large models becomes unsustainable, researchers are shifting toward sparse models that selectively activate only the necessary “experts” for a given task.

Key Developments

- DeepSeek-MoE: Activates only a few expert layers per query, dramatically improving compute efficiency.

- GShard and Switch Transformer: Google’s innovations in sparsely gated models for scalable training.

- These models achieve GPT-level output with fractional compute costs, a major breakthrough for deployment at scale.

Sparse architectures may soon dominate enterprise AI, where cost, speed, and sustainability are paramount.

D. Lightweight & Edge-Optimized Models

AI at the Edge

With growing demand for real-time AI in mobile devices, IoT, and embedded systems, efficient models are more important than ever.

Model Examples

- MobileNet and EfficientNet: Lightweight CNNs built for fast inference with limited resources.

- DistilBERT: A compressed version of BERT that retains much of its performance with fewer parameters.

Expect to see more architectures tailored for on-device processing, offline inference, and low-latency interaction.

E. Self-Supervised, Federated, and Ethical AI

Learning Without Labels

- Self-supervised learning allows models to learn from vast amounts of unlabeled data, mimicking human exploratory learning.

- Key examples: SimCLR, BYOL, and the pretraining regimes of GPT-like models.

Privacy-Preserving Training

- Federated learning enables AI systems to learn across distributed devices (e.g., smartphones) without centralizing user data—enhancing both privacy and efficiency.

- Used by companies like Apple and Google for on-device personalization.

Transparency and Accountability

- Explainable AI (XAI) and Ethical AI are growing fields focused on ensuring models are understandable, fair, and aligned with human values.

- Emerging architectures may include built-in interpretability and safety mechanisms as standard.

Pulling the Future Together

These architectural trends—whether it’s unifying modalities, optimizing scale through sparsity, or pushing computation to the edge—point toward a new era of AI design. The future won’t be dominated by a single model or paradigm but by flexible, efficient systems that can adapt to a wide range of tasks, devices, and ethical requirements.

From quantum exploration to self-supervised learning, today’s research is laying the groundwork for architectures that are not only smarter and faster—but also safer, more accessible, and more context-aware. Understanding these trajectories now will prepare technologists, developers, and strategists to navigate the next decade of AI with confidence.

V. Conclusion

Recap: A Diverse and Evolving Architecture Landscape

Artificial intelligence is not one monolithic system—it is a constellation of architectures, each engineered for different types of data, environments, and use cases. From the attention-driven power of transformers to the structure-focused insights of GNNs, each model contributes a unique strength to the broader AI ecosystem. We’ve examined how these models power real-world systems—everything from ChatGPT and Tesla’s Autopilot to drug discovery engines and recommendation algorithms.

Specialization Meets Convergence

The boundaries between models are dissolving. Transformers are being extended into vision, speech, and multimodal domains. CNNs still dominate efficient vision systems, but they now share space with vision transformers and diffusion-based image generators. Even legacy architectures like RNNs have influenced how we think about sequence modeling in modern contexts. The trend is clear: AI design is moving toward adaptable, modular systems, where the best architecture for the job is selected or integrated dynamically.

Looking Forward

Efficiency, scale, ethics, and cross-modal intelligence will shape the next decade of AI. Innovations like Mixture of Experts models (e.g., DeepSeek-MoE) and edge-ready networks (e.g., MobileNet) are making AI faster, smaller, and more practical. Meanwhile, unified multimodal models are pushing us closer to systems that can reason and interact like humans.

Whether you’re building AI tools, designing enterprise infrastructure, or simply trying to stay informed, understanding these architectural foundations gives you a map of where we are—and a compass for where we’re going.

📘 Need a refresher on the basics?

Our Tech FAQ covers AI, automation, cybersecurity, and more — in plain English. Perfect for developers, students, and lifelong learners.

Enjoyed this post? Sign up for our newsletter to get more updates like this!

🔐 Protect Your Privacy

NordVPN is fast, secure, and trusted by millions worldwide.

Get NordVPN →Sponsored Content