Introduction

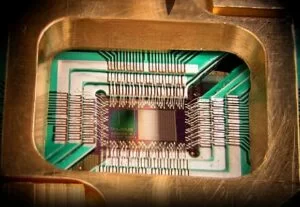

Quantum AI is the fusion of quantum computing and artificial intelligence (AI), and it could mark a significant leap forward in technology. Unlike traditional computers, which process bits that are either 0 or 1, quantum computers use qubits and the principles of quantum mechanics, such as superposition and entanglement. For certain classes of problems, this allows calculations that would be impractical on classical machines. Meanwhile, AI uses algorithms, many of them loosely inspired by the way neurons work in the human brain, to power machine learning, pattern recognition, and problem-solving.

Moreover, combining quantum computing and AI opens up extraordinary opportunities, potentially transforming fields from drug discovery to space exploration by making AI algorithms vastly more powerful. At this pivotal crossroads of quantum and AI technologies, it’s crucial to explore both the vast potential and the wide-ranging implications of Quantum AI. Indeed, these advancements promise to redefine the boundaries of scientific and technological innovation.

Quantum Computing Enhancements in AI Algorithms

Quantum Machine Learning

A key area of this intersection is quantum machine learning, which integrates quantum algorithms into machine learning processes. Specifically, quantum machine learning exploits the ability of quantum states to hold superpositions over many configurations, which may enable more efficient processing of complex, high-dimensional datasets. Furthermore, quantum-enhanced machine learning algorithms, such as quantum neural networks and quantum support vector machines, show potential to accelerate learning processes and optimize algorithmic efficiency.

Quantum Optimization

Quantum optimization algorithms harness quantum tunneling and superposition to navigate complex optimization landscapes, benefiting AI applications that involve combinatorial optimization problems. Recent work on quantum annealing and on variational algorithms such as the variational quantum eigensolver (VQE) has shown promise for optimizing neural network parameters and for unsupervised learning tasks such as clustering and dimensionality reduction.

Quantum Approximate Optimization Algorithm (QAOA)

Introduction to QAOA

One notable hybrid quantum-classical algorithm designed to solve combinatorial optimization problems is the Quantum Approximate Optimization Algorithm (QAOA). Developed by Farhi, Goldstone, and Gutmann, it uses quantum states to explore potential solutions efficiently.

How QAOA Works

First, the algorithm prepares a superposition of all possible solutions using qubits. Next, a series of parameterized quantum gates is applied to evolve this state. The parameters of these gates are then optimized using classical methods. Finally, the quantum state is measured repeatedly to find the best solution.

Applications and Advantages

QAOA has been applied to complex problems like the Max-Cut problem and portfolio optimization. It offers potential speed-ups and scalability over classical algorithms, but its effectiveness depends on advancements in quantum computing hardware, and it currently faces challenges related to noise and error in quantum computers.
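To make the Max-Cut problem concrete, here is a minimal classical sketch that finds the exact answer by checking every partition of a small graph. The function name `brute_force_max_cut` and the 4-node ring are illustrative assumptions, not part of any library. The search grows as 2^n, so it is only practical for small graphs, but it gives a reference value to compare QAOA results against.

```python
from itertools import product

import numpy as np


def brute_force_max_cut(graph):
    """Return (best_cut_size, best_assignment) by checking every partition."""
    n = len(graph)
    best_size, best_bits = -1, None
    for bits in product((0, 1), repeat=n):
        # Count edges whose two endpoints are on opposite sides of the cut.
        size = sum(
            1
            for i in range(n)
            for j in range(i + 1, n)
            if graph[i][j] == 1 and bits[i] != bits[j]
        )
        if size > best_size:
            best_size, best_bits = size, bits
    return best_size, best_bits


# Illustrative 4-node ring with edges 0-1, 1-2, 2-3, 3-0.
ring = np.array([
    [0, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 0, 1, 0],
])
best_size, best_bits = brute_force_max_cut(ring)
print(best_size, best_bits)
```

For the ring, alternating the nodes between the two groups cuts all four edges, so the best cut size is 4.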

Current Limitations:

Hardware dependency: QAOA’s effectiveness is closely tied to advances in quantum computing hardware. Additionally, today’s quantum computers are prone to errors and noise, which degrade the algorithm’s performance.

Key Takeaways

In essence, QAOA exemplifies how quantum computing can enhance AI algorithms, particularly in optimization tasks. Its development continues to be a focal point in the field of quantum-enhanced AI. The principles of variational techniques and iterative refinement offer profound implications for AI’s evolution. Nevertheless, noise and error in current quantum computers are limiting factors.

Transitioning from Theory to Practice

To solidify our understanding of the Quantum Approximate Optimization Algorithm (QAOA) and appreciate its practical implementation, it’s crucial to delve into the mathematical framework that underpins it. This section introduces four key mathematical components that are central to executing QAOA effectively.

The four components are:

- Problem Hamiltonian H_C: encodes the specifics of the optimization problem we aim to solve, guiding the quantum system towards potential solutions.
- Mixer Hamiltonian H_B: promotes exploration of the solution space by letting the quantum state transition between different configurations.
- QAOA circuit: the quantum circuit architecture that implements the two Hamiltonians and controls how they alternate over the computation.
- Optimization: the classical optimization techniques that fine-tune the parameters governing the quantum gates in the QAOA circuit.

Together, these components show how QAOA applies the principles of quantum mechanics to complex optimization tasks that are hard for classical algorithms.

QAOA is a variational algorithm: it iteratively refines its parameters to converge towards the optimal solution. The depth p of the circuit, meaning the number of alternating problem and mixer layers, determines the complexity and potential accuracy of the algorithm. A higher p typically offers better approximations at the cost of increased quantum resources.
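For reference, the components described above can be written out for Max-Cut on a graph G = (V, E) with n nodes. This is the standard textbook formulation and is an assumption about what the post intends. Z_i and X_i are Pauli operators on qubit i, and the angles γ_k and β_k are the parameters tuned by the classical optimizer.

```latex
\begin{align*}
H_C &= \sum_{(i,j) \in E} \tfrac{1}{2}\,\bigl(1 - Z_i Z_j\bigr),
\qquad
H_B = \sum_{i \in V} X_i \\
|\boldsymbol{\gamma}, \boldsymbol{\beta}\rangle
  &= e^{-i \beta_p H_B}\, e^{-i \gamma_p H_C} \cdots
     e^{-i \beta_1 H_B}\, e^{-i \gamma_1 H_C}\, |{+}\rangle^{\otimes n} \\
F_p(\boldsymbol{\gamma}, \boldsymbol{\beta})
  &= \langle \boldsymbol{\gamma}, \boldsymbol{\beta} |\, H_C \,| \boldsymbol{\gamma}, \boldsymbol{\beta} \rangle,
\qquad
(\boldsymbol{\gamma}^{*}, \boldsymbol{\beta}^{*})
  = \arg\max_{\boldsymbol{\gamma}, \boldsymbol{\beta}} F_p(\boldsymbol{\gamma}, \boldsymbol{\beta})
\end{align*}
```

In the Qiskit code in this post, each edge’s CX, RZ(2γ), CX sequence applies e^{-iγ Z_i Z_j}, which matches e^{-iγ H_C} up to a global phase and a constant rescaling of γ, and RX(2β) on each qubit applies e^{-iβ X_i}. That code also uses the special case where every layer shares one γ and one β.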

Turning the Math Into Code

Using Qiskit for QAOA

Fortunately, Qiskit, an open-source quantum computing SDK for Python, makes it straightforward to turn the algorithm into working code. Here’s a brief overview of the process:

1. Define the Graph:

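import numpy as np

def create_graph(n):
    # Random undirected graph as an n x n adjacency matrix;
    # each possible edge is included with probability 0.5.
    graph = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            if np.random.rand() > 0.5:
                graph[i, j] = graph[j, i] = 1
    return graph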

2. Problem Hamiltonian:

def problem_hamiltonian(qc, gamma, graph):
    # For each edge (i, j), CX, RZ(2 * gamma), CX applies exp(-i * gamma * Z_i Z_j).
    n = len(graph)
    for i in range(n):
        for j in range(i + 1, n):
            if graph[i, j] == 1:
                qc.cx(i, j)
                qc.rz(2 * gamma, j)
                qc.cx(i, j)
    return qc

3. Mixer Hamiltonian:

def mixer_hamiltonian(qc, beta, n):
    # RX(2 * beta) on every qubit applies exp(-i * beta * X_i).
    for i in range(n):
        qc.rx(2 * beta, i)
    return qc

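4. QAOA Circuit:

from qiskit import QuantumCircuit

def create_qaoa_circuit(graph, p, gamma, beta):
    n = len(graph)
    qc = QuantumCircuit(n)
    # Hadamard gates prepare the uniform superposition over all bitstrings.
    for i in range(n):
        qc.h(i)
    # p alternating layers; this version reuses one (gamma, beta) pair in every layer.
    for _ in range(p):
        qc = problem_hamiltonian(qc, gamma, graph)
        qc = mixer_hamiltonian(qc, beta, n)
    qc.measure_all()
    return qc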

5. Optimize Parameters and Execute:

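import numpy as np
from qiskit import transpile
from qiskit_aer import AerSimulator
from scipy.optimize import minimize

backend = AerSimulator()  # assumption: a local simulator from the qiskit-aer package

def optimize_qaoa(graph, p):
    def objective_function(params):
        gamma, beta = params
        qc = create_qaoa_circuit(graph, p, gamma, beta)
        qc = transpile(qc, backend)
        # backend.run replaces execute(), which was removed in Qiskit 1.0;
        # the unused assemble() call is dropped.
        result = backend.run(qc, shots=1024).result()
        counts = result.get_counts()
        # compute_expectation should return the average cut value of the measured bitstrings.
        return -compute_expectation(counts, graph)

    result = minimize(objective_function, np.random.rand(2), method='COBYLA')
    return result.x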
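The objective function above relies on a `compute_expectation` helper. One possible version is sketched below, under the assumption that the cost is the Max-Cut value (the number of edges cut) averaged over all measured shots. The helper `cut_value` is a hypothetical name introduced here for illustration. The bitstring is reversed because Qiskit writes qubit 0 as the rightmost character of each measurement key.

```python
import numpy as np


def cut_value(bitstring, graph):
    """Number of edges whose endpoints land on opposite sides of the cut."""
    bits = bitstring[::-1]  # Qiskit puts qubit 0 at the right end of the string
    n = len(graph)
    return sum(
        1
        for i in range(n)
        for j in range(i + 1, n)
        if graph[i][j] == 1 and bits[i] != bits[j]
    )


def compute_expectation(counts, graph):
    """Average cut value over all shots in a {bitstring: count} dictionary."""
    total_shots = sum(counts.values())
    weighted = sum(cut_value(b, graph) * c for b, c in counts.items())
    return weighted / total_shots


# Illustrative 3-node path graph (edges 0-1 and 1-2) with made-up counts.
path = np.array([
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
])
example_counts = {"010": 3, "000": 1}
print(compute_expectation(example_counts, path))
```

Because `optimize_qaoa` minimizes the negative of this value, the classical optimizer is effectively maximizing the average cut size.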

Advanced Neural Networks and Quantum Computing

The integration of quantum computing with advanced neural network models, such as Quantum Neural Networks (QNNs), promises a paradigm shift in the efficiency and complexity of machine learning. By leveraging superposition and entanglement, quantum algorithms could make neural networks more efficient at processing high-dimensional data spaces and complex patterns.

Neural Network Training Using Quantum Algorithms

Quantum algorithms, like the Quantum Gradient Descent algorithm, aim to navigate the optimization landscape of neural networks more efficiently and so improve the convergence rate of learning algorithms. Currently, research explores various architectures, from quantum convolutional neural networks to quantum recurrent neural networks. Each targets different applications and harnesses quantum mechanics for more effective problem-solving.

Synergizing Quantum Computing with AI

The integration of quantum computing with AI significantly enhances capabilities in machine learning, data analysis, and neural network optimization. Quantum algorithms, such as the Quantum Support Vector Machine (QSVM) and Quantum Principal Component Analysis (QPCA), facilitate handling high-dimensional datasets and uncovering patterns within large datasets more effectively.

Leveraging Specific Quantum Algorithms

Furthermore, specific quantum algorithms, like Grover’s and Shor’s algorithms, demonstrate potential utility in tasks such as unstructured data search and cryptographic computations. This synergy promises groundbreaking advancements in fields requiring rapid data processing and complex problem-solving capabilities.
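As a rough illustration of unstructured search, the following NumPy sketch simulates Grover’s algorithm on a classical statevector. It is a toy simulation under stated assumptions, not code for real quantum hardware: the function name `grover_search`, the 3-qubit search space, and the marked index 5 are all chosen here for illustration. The oracle flips the sign of the marked amplitude, and the diffusion step reflects every amplitude about the mean.

```python
import numpy as np


def grover_search(n_qubits, marked):
    """Simulate Grover's algorithm and return the final measurement probabilities."""
    size = 2 ** n_qubits
    state = np.full(size, 1 / np.sqrt(size))  # uniform superposition
    iterations = int(np.floor(np.pi / 4 * np.sqrt(size)))
    for _ in range(iterations):
        state[marked] *= -1               # oracle: flip the marked amplitude's sign
        state = 2 * state.mean() - state  # diffusion: reflect about the mean
    return state ** 2                     # amplitudes stay real, so squaring gives probabilities


probs = grover_search(n_qubits=3, marked=5)
print(int(np.argmax(probs)), float(probs[5]))
```

With 8 entries, two iterations are enough to push the probability of the marked item above 90%, whereas a single random classical guess succeeds with probability 1/8.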

Quantum Algorithms and Computational Complexity

The development of quantum algorithms, such as Shor’s and Grover’s algorithms, represents a pivotal advancement in computational complexity. Shor’s algorithm factors integers in polynomial time, a problem believed to be intractable for classical computers, while Grover’s algorithm offers a quadratic speed-up for unstructured search. Consequently, these results have prompted a reevaluation of classical complexity theory and motivated complexity classes tailored to quantum computing.

New Quantum Complexity Classes

New complexity classes, such as BQP (Bounded Error Quantum Polynomial Time) and QMA (Quantum Merlin Arthur), capture the unique capabilities of quantum computers. They provide a framework for categorizing problems based on their solvability using quantum algorithms.

Case Studies: Quantum Computing and AI in Action

Healthcare Applications

In healthcare, quantum computing is being explored as a way to analyze vast genomic datasets. For instance, the collaboration between D-Wave and DNA-SEQ Alliance aims to improve cancer treatment prediction based on genomic data.

Financial Sector Innovations

Next, the finance sector leverages quantum computing for complex risk analysis and optimization problems. JPMorgan Chase & Co. is experimenting with quantum algorithms for credit scoring and derivative pricing.

Enhancing Cybersecurity

Finally, quantum computing is reshaping cryptography, with projects like the Quantum Resistant Ledger (QRL) developing blockchain technology designed to be secure against quantum-based attacks.

Innovative Research in AI and Quantum Computing

University of Maryland Initiatives

Meanwhile, researchers at the University of Maryland are exploring quantum algorithms in machine learning for improved pattern recognition and data classification.

IBM Quantum Team Contributions

Simultaneously, IBM is working on quantum-enhanced feature spaces for machine learning, using quantum circuits to map classical data into a quantum feature space.
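To give a feel for what mapping classical data into a quantum feature space means, here is a small NumPy sketch. It is a deliberately simplified assumption and not IBM’s feature map: each feature is encoded on its own qubit with a single RY rotation, with no entanglement, so the result is easy to compute classically. The names `angle_feature_state` and `quantum_kernel` are hypothetical. The kernel value is the squared overlap between two encoded states, which is the kind of quantity a kernel method such as a support vector machine would consume.

```python
import numpy as np


def angle_feature_state(x):
    """Encode each feature x_i as RY(x_i)|0> and return the product statevector."""
    state = np.array([1.0])
    for xi in x:
        qubit = np.array([np.cos(xi / 2), np.sin(xi / 2)])  # RY(xi) applied to |0>
        state = np.kron(state, qubit)
    return state


def quantum_kernel(x, y):
    """Kernel entry k(x, y) = |<phi(x)|phi(y)>|^2 for the encoding above."""
    overlap = angle_feature_state(x) @ angle_feature_state(y)
    return float(np.abs(overlap) ** 2)


a = [0.3, 1.2]
b = [0.5, 0.9]
print(quantum_kernel(a, a), quantum_kernel(a, b))
```

Identical inputs give a kernel value of 1, and the value falls towards 0 as the encoded states become orthogonal. Feature maps of practical interest add entangling gates so that the kernel is hard to evaluate classically.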

Similarly, Google AI Quantum focuses on developing quantum neural networks, harnessing quantum properties to create more efficient and adaptive neural networks.

Quantum Computing in AI – The Road Ahead

Quantum-Enhanced Optimization Algorithms

Research teams at MIT and Stanford are focusing on quantum optimization for complex systems, aiming to exploit quantum parallelism for intractable problems.

Quantum-Enhanced Machine Learning

The Quantum Artificial Intelligence Lab, a collaboration between NASA, Google, and USRA, is investigating how quantum computing can accelerate deep learning processes in AI.

Long-Term Goals and Visions

Looking ahead, the long-term goals for quantum computing in AI involve achieving significant breakthroughs in computational speed and problem-solving capabilities, transforming our approach to solving complex problems.

Conclusion

In conclusion, the integration of quantum computing with AI promises to revolutionize various fields, from healthcare to finance to cybersecurity. Ongoing research and development are indicative of a future where these technologies converge to address some of the world’s most complex challenges.

References:

- D-Wave Systems Inc. (2020). “Leveraging Quantum Computing in Genomics.”

- S. Woerner & D.J. Egger (2019). “Quantum risk analysis.” NPJ Quantum Information.

- QRL Foundation (2021). “Quantum Resistant Ledger Whitepaper.”

- Alsing et al. (2019). “Quantum Machine Learning.” University of Maryland.

- Havlicek et al. (2019). “Supervised learning with quantum-enhanced feature spaces.” IBM Quantum.

- Google AI Quantum Team (2018). “Exploring Quantum Neural Networks.”
